I’ve always been fascinated by quantum computing; it’s like something out of a sci-fi movie I’d watch as a kid. These machines are already tackling a handful of carefully chosen problems that, by their builders' estimates, would leave even the largest supercomputers sweating for thousands of years. It's a truly remarkable advancement. But how did we arrive at this point? What principles underpin these groundbreaking devices, and why are tech giants investing so heavily in their development? Driven by my own intense curiosity, and after countless hours exploring discussions by leading experts, let's embark on a journey into this fascinating realm where physics and computer science converge.

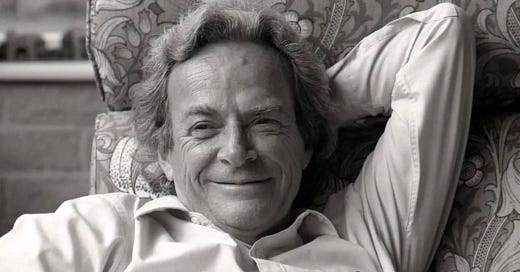

The Genesis: Feynman's Quantum Insight – "Nature isn't classical!"

In May 1981, at the Physics of Computation conference held at MIT, Richard Feynman gave a talk titled "Simulating Physics with Computers" and dropped a bombshell that flipped computing on its head. "Nature isn't classical," he asserted with his characteristic spark. His point was elegantly simple: our traditional computers are inherently limited when it comes to modeling the intricacies of quantum mechanics. However, Feynman wasn't merely pointing out a limitation; he was proposing a radical alternative: "Could we construct a new kind of computer? A quantum computer?"

Feynman's proposition wasn't a spur-of-the-moment idea. He had been contemplating the future of computing for years. As far back as 1959, in his renowned lecture "There's Plenty of Room at the Bottom," he was already envisioning the miniaturization of circuits to the atomic scale, essentially predicting nanotechnology before the term even existed. Feynman was demonstrably ahead of his time.

His insight stemmed from the realization that classical computers would inevitably hit a wall when attempting to simulate quantum phenomena. Describing a quantum system exactly means tracking a number for every possible configuration of its particles, and that count doubles with each additional two-level particle, so the cost grows exponentially. It was this challenge that sparked his revolutionary idea: what if we could harness the principles of quantum mechanics itself for computation? It's akin to using fire to understand fire: a conceptually simple yet profoundly innovative approach.
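
To put a number on that exponential growth, here is a minimal Python sketch of the memory a brute-force classical simulation would need. It assumes the simplest possible approach, storing one complex amplitude per configuration at 16 bytes each (two double-precision floats); the function name `state_vector_bytes` is my own, and smarter simulation techniques can do much better for special cases.

```python
# Memory for a brute-force "state-vector" simulation of n qubits.
# Assumption: one complex amplitude per basis state, stored as two
# 64-bit floats (16 bytes), with no compression or clever tricks.

BYTES_PER_AMPLITUDE = 16


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to hold all 2**n_qubits amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


for n in (30, 40, 50):
    gib = state_vector_bytes(n) // 2 ** 30  # convert bytes to GiB
    print(f"{n} qubits -> {gib:,} GiB")
# 30 qubits -> 16 GiB
# 40 qubits -> 16,384 GiB
# 50 qubits -> 16,777,216 GiB
```

At 50 qubits that is 16 pebibytes, on the scale of the total memory of the very largest supercomputers, and every additional qubit doubles it again.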

Quantum mechanics is weird; even today, physicists lack a universally accepted interpretation of it. Think of the electron: rather than orbiting the nucleus along a defined path, it is described by a probability cloud that assigns a likelihood of finding it at each position. Superposition is like a coin spinning in the air: in a sense it’s heads and tails at the same time until it lands. And entanglement? Picture two coins that, no matter how far apart they are flipped, always land showing matching faces. These properties of superposition and entanglement, fundamental to quantum particles, make simulating quantum states on classical computers exponentially expensive, and for large systems practically impossible. This behaviour, however, is confined to the quantum realm and absent from our everyday macroscopic experience. To genuinely simulate quantum phenomena, we need quantum objects that we can control and measure, and that we can assemble by the thousands or even millions into a computer. We call such an object a qubit, by analogy with the bits of classical computing.
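
For readers who like to see the machinery, here is a toy pure-Python simulation of superposition and entanglement. It starts two qubits in |00>, applies a Hadamard gate (creating a superposition) and then a CNOT gate (creating entanglement), and samples measurements. The helper names and the qubit ordering are my own conventions, not any library's API.

```python
import math
import random

# A two-qubit state is a list of 4 amplitudes for |00>, |01>, |10>, |11>.
# Index = 2*q0 + q1, so qubit 0 is the left-hand bit.


def hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0, putting it into superposition."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot_q0_q1(state):
    """Flip qubit 1 whenever qubit 0 is 1: swaps the |10> and |11> amplitudes."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state):
    """Born rule: the probability of each outcome is |amplitude|**2."""
    return [abs(a) ** 2 for a in state]


bell = cnot_q0_q1(hadamard_q0([1.0, 0.0, 0.0, 0.0]))
probs = probabilities(bell)
print([round(p, 6) for p in probs])  # [0.5, 0.0, 0.0, 0.5]

# Simulate 1,000 measurements (fixed seed for repeatability).
rng = random.Random(42)
outcomes = rng.choices(["00", "01", "10", "11"], weights=probs, k=1000)
print(sorted(set(outcomes)))  # ['00', '11']
```

Only 00 and 11 ever appear: each qubit on its own behaves like a fair coin, yet the two always agree. Neither side can use this to send a message, since each individual result is still random.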

A qubit, therefore, is any physical system capable of existing in superposition and becoming entangled with other qubits. The challenge lies in the extreme sensitivity of quantum objects to their environment. Minute temperature fluctuations, stray air molecules, even cosmic rays: any form of "noise" can disrupt superposition or entanglement, causing "decoherence" and effectively erasing the quantum information held within the qubit.
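
Decoherence can be sketched with the simplest textbook model, pure dephasing, in which the "quantumness" of a superposition fades exponentially with a time constant usually called T2. The sketch below assumes a qubit prepared in the superposition |+> = (|0> + |1>)/√2 and a hypothetical T2 of 100 microseconds; real devices also lose energy (so-called T1 processes) and suffer noise that is not neatly exponential.

```python
import math

T2_US = 100.0  # hypothetical coherence time in microseconds (assumption)


def prob_plus(t_us: float, t2_us: float = T2_US) -> float:
    """Probability that a measurement in the |+>/|-> basis still returns '+'.

    Under pure dephasing, the off-diagonal density-matrix term of |+> decays
    as 0.5 * exp(-t / T2), and P(+) = 0.5 + that term.
    1.0 means the superposition is intact; 0.5 means a coin toss.
    """
    coherence = 0.5 * math.exp(-t_us / t2_us)
    return 0.5 + coherence


for t in (0, 50, 100, 500):
    print(f"t = {t:>3} us: P(+) = {prob_plus(t):.3f}")
```

After a few multiples of T2 the result is indistinguishable from a coin toss: the quantum information has leaked into the environment.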

Quantum Error Correction: Taming Quantum Instability

If the promise of quantum computing seems almost too good to be true, some skepticism is warranted. Quantum states are inherently fragile: keeping one intact is like balancing a pencil on its tip during an earthquake. Even the slightest external disturbance can induce decoherence, causing quantum information to vanish.

This is where quantum error correction emerges as a critical solution, the often-unheralded hero of the quantum narrative.

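A significant breakthrough came in 1995 with the Shor code, named after Peter Shor and distinct from his famous factoring algorithm. This ingenious technique enables the detection and correction of errors that would otherwise cripple quantum computations. Without delving into excessive technical details, the Shor code spreads a single "logical" qubit across nine "physical" qubits, enough to catch and fix an arbitrary error on any one of them. Because quantum information cannot simply be copied, the code never reads the data directly; instead it checks parities between qubits, which reveal where an error struck without disturbing the stored information. Visualize it as employing backup dancers: if one falters, the others maintain the rhythm. This redundancy allows errors to be detected and corrected before a quantum calculation derails.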
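
The core trick can be shown with the 3-qubit bit-flip code, one of the two building blocks Shor combined (the other protects against phase flips, and nesting the two gives his 9-qubit code). This pure-Python sketch encodes α|0> + β|1> as α|000> + β|111>, injects a single bit flip, and uses two parity checks to locate and undo it. One caveat: the sketch takes a shortcut by reading the parities straight off the simulated amplitudes, whereas real hardware measures them with extra helper (ancilla) qubits. The function names are my own.

```python
# 3-qubit bit-flip code: one ingredient of Shor's 9-qubit code.
# State = 8 amplitudes; basis index = 4*q0 + 2*q1 + q2.


def encode(alpha, beta):
    """Spread one logical qubit over three: a|0> + b|1> -> a|000> + b|111>."""
    state = [0.0] * 8
    state[0b000] = alpha
    state[0b111] = beta
    return state


def apply_x(state, qubit):
    """Bit-flip (Pauli-X) on one physical qubit: permutes the amplitudes."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]


def bit(index, qubit):
    return (index >> (2 - qubit)) & 1


def syndrome(state):
    """Parities (q0 xor q1, q1 xor q2).

    With at most one flip, every basis state with a non-zero amplitude gives
    the same parities, so they pinpoint the error without revealing alpha or
    beta. (Simulation shortcut: we read them from the amplitudes; hardware
    would measure them via ancilla qubits.)
    """
    index = next(i for i, a in enumerate(state) if abs(a) > 1e-12)
    return (bit(index, 0) ^ bit(index, 1), bit(index, 1) ^ bit(index, 2))


# Which qubit to flip back for each syndrome (None means no error seen).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(state):
    target = CORRECTION[syndrome(state)]
    return state if target is None else apply_x(state, target)


logical = encode(0.6, 0.8)  # example amplitudes: 0.36 + 0.64 = 1
for q in range(3):
    damaged = apply_x(logical, q)
    print(q, syndrome(damaged), correct(damaged) == logical)
# 0 (1, 0) True
# 1 (1, 1) True
# 2 (0, 1) True
```

Two simultaneous flips would fool this little code into "correcting" the wrong qubit, which is why practical schemes use larger codes and still need low physical error rates to begin with.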

Consider this analogy: instead of a single tightrope walker carrying delicate quantum data, vulnerable to the slightest breeze, imagine a team of walkers moving in perfect synchronicity. Should one falter, the others provide support, ensuring the integrity of the performance (the quantum data) remains intact. It's essentially a backup for your backup.

Heather West, a research manager for quantum computing at IDC, aptly states, "Error correction is the crucial puzzle piece. It's the necessary solution for quantum systems to scale and address real-world problems." Her assessment is accurate. Until robust error correction is achieved, quantum computers will largely remain confined to small-scale experiments, intriguing but not yet transformative.

Quantum Architectures: Diverse Approaches to the Future

But stabilizing qubits is only half the battle. Now, let’s check out the different ways people are building these quantum computers:

Superconducting qubits currently represent a leading approach, utilized by companies like IBM and Google. These qubits operate at around a hundredth of a degree above absolute zero, far colder than outer space, and exhibit reasonable stability. They are, in a sense, the current standard, similar to the dominance of Intel and AMD chips in classical computing. While not without limitations, they have yielded the most impressive results to date.

Photonic quantum computing employs photons, particles of light, as qubits, as seen in systems like China's Jiuzhang. A potential advantage is the possibility of room-temperature operation and integration with existing fiber optic networks. The challenge, however, lies in the extreme difficulty of controlling individual photons – akin to managing individual water droplets within a waterfall.

Diamond-based quantum systems, championed by researchers like Marcus Doherty from Quantum Brilliance, offer the prospect of room-temperature quantum computing, eliminating the need for extensive cooling systems. This could significantly enhance accessibility and reduce the energy footprint of quantum computing, potentially paving the way for quantum laptops rather than room-sized installations.

Topological qubits are Microsoft's big bet. In theory, these qubits are inherently stable because their information is stored nonlocally, spread across the topology of the system, so local disturbances have a hard time corrupting it. This is a higher-risk, higher-reward strategy, comparable to skipping incremental improvements and pursuing a revolutionary leap in technology directly.

The Quantum Race: Tech Giants Enter the Arena

So, who’s in on this quantum party? Big tech’s all over it: think of it as a global race where the prize is a computer that can outsmart time itself.

IBM has been a consistent presence in the quantum computing field, steadily developing a strong foundation in superconducting qubit technology. They have progressively pushed performance boundaries with processors like the 127-qubit Eagle, followed by the 433-qubit Osprey, and now the impressive 1,121-qubit Condor.

IBM's approach is notable for its holistic perspective. Beyond merely increasing qubit counts, they are focused on establishing a comprehensive quantum computing ecosystem. Their Qiskit open-source platform is a significant contribution, enabling researchers and developers worldwide to engage with quantum algorithms.

Their long-term vision is ambitious. IBM aims to launch its first "quantum-centric supercomputer" by 2025, incorporating over 4,000 interconnected qubits. By 2033, they envision systems capable of performing a billion quantum gates, a milestone that could truly unlock the transformative potential of quantum computing.

Google generated considerable attention in 2019 by claiming "quantum supremacy." Using their 53-qubit Sycamore processor, they reported sampling the output of a random quantum circuit in 200 seconds, a task they estimated would take the fastest supercomputer 10,000 years. This was a bold assertion, and IBM quickly countered that a better classical simulation could finish the same job in about 2.5 days.

More recently, in December 2024, Google unveiled their Willow chip, featuring 105 superconducting qubits. The claim is that it can perform a benchmark calculation in under five minutes that would take classical supercomputers an astonishing "10 septillion years" (10^25 years), vastly exceeding the roughly 13.8-billion-year age of the universe. While such claims warrant careful evaluation, the progress is undeniably remarkable.
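
A quick back-of-the-envelope check helps put these claims on a human scale. The inputs below are Google's own estimates (10,000 years versus 200 seconds for Sycamore, 10 septillion years for Willow's classical counterpart), not independent measurements, and the age of the universe is taken as roughly 13.8 billion years.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600   # Julian year
AGE_OF_UNIVERSE_YEARS = 13.8e9          # approximate

# Google's 2019 Sycamore claim: 200 s vs. an estimated 10,000 years.
sycamore_speedup = 10_000 * SECONDS_PER_YEAR / 200

# Google's Willow claim: ~10 septillion (1e25) years on a classical machine.
willow_in_universe_ages = 1e25 / AGE_OF_UNIVERSE_YEARS

print(f"Sycamore claim: about {sycamore_speedup:.1e} times faster")
print(f"Willow estimate: about {willow_in_universe_ages:.1e} ages of the universe")
```

The Willow figure works out to hundreds of trillions of ages of the universe, so "exceeding" rather undersells it; the ratios, of course, inherit any doubts about the underlying estimates.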

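Google's current focus is squarely on error correction, and this may be Willow's most important result. As Google enlarged its error-corrected logical qubits from distance 3 to 5 to 7 (roughly 3×3, 5×5 and 7×7 grids of data qubits), the logical error rate roughly halved at each step. That is the long-sought regime known as operating "below threshold," where adding more qubits suppresses errors instead of compounding them.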
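
The "below threshold" idea can be captured in a few lines. In a surface code of distance d, each logical qubit uses 2d² - 1 physical qubits (d² data qubits plus d² - 1 measurement qubits), and below threshold every increase of d by 2 divides the logical error rate by a factor usually written Λ. The sketch assumes Λ = 2 (close to the roughly twofold suppression Google reported for Willow) and a hypothetical distance-3 error rate; both are illustrative assumptions, not measured values.

```python
# Illustrative surface-code scaling. Both constants are assumptions.
LAMBDA = 2.0     # error suppression per distance step (d -> d + 2)
EPS_D3 = 3e-3    # hypothetical logical error rate per cycle at distance 3


def physical_qubits(d: int) -> int:
    """Rotated surface code: d*d data qubits plus d*d - 1 measure qubits."""
    return 2 * d * d - 1


def logical_error(d: int) -> float:
    """Below threshold (LAMBDA > 1), each d -> d + 2 divides the error by LAMBDA."""
    return EPS_D3 / LAMBDA ** ((d - 3) / 2)


for d in (3, 5, 7, 9, 11):
    print(f"d={d:2}: {physical_qubits(d):3} qubits, "
          f"logical error ~{logical_error(d):.1e} per cycle")
```

A distance-7 patch needs 97 physical qubits, which fits on Willow's 105. If Λ were below 1, the same formula would run the other way: adding qubits would add more errors than it fixes, which is why crossing the threshold matters so much.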

Microsoft is pursuing a distinct strategy, focusing on "topological" quantum computing. They announced their Majorana 1 chip in February 2025. Some analysts believe this approach could offer a significant advantage: Microsoft says it has engineered a new state of matter in which to build qubits that are inherently more stable.

This is analogous to developing a puncture-proof tire rather than improving puncture repair methods, a fundamentally different approach. However, skepticism exists within the scientific community: the peer-reviewed Nature paper published alongside the announcement carried an editorial note stating that its results do not represent evidence of the Majorana zero modes on which these qubits depend.

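Amazon, a more recent entrant into quantum hardware, unveiled their "Ocelot" prototype chip in February 2025. It is built around so-called cat qubits, which are designed to suppress one type of error in hardware and so reduce the overhead of error correction. Amazon may be newer to chip fabrication, but their cloud computing expertise (their Amazon Braket service already offers access to other companies' quantum hardware) and vast resources make them a serious contender. Their potential should not be underestimated.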

The quantum race is not solely a Western endeavor. Asian nations are also making significant strides.

China has demonstrated considerable quantum capabilities, showcasing two quantum computers that its researchers say have achieved "quantum supremacy" in different ways. Jiuzhang, employing photons, performed a specialized sampling task in 200 seconds that its team estimated would take a leading classical supercomputer approximately 2.5 billion years. Meanwhile, Zuchongzhi, utilizing superconducting qubits similar to Google's and IBM's, is reported to have completed in 1.2 hours a sampling task that would take the most powerful supercomputer at least 8 years. These are the teams' own estimates, and improved classical algorithms have since narrowed such gaps, but the figures remain striking.

Japan has adopted a strategic approach to integrate quantum technology across key industries, aiming for 10 million users by 2030. This proactive strategy is likely to stimulate further development in quantum hardware and foster innovation in quantum software and services, with the ambition of making quantum technology an integral part of everyday life.

Looking to the Future: The Quantum Horizon

The quantum landscape is evolving rapidly, but what lies ahead?

"It is important to remember that this is a marathon, not a sprint," cautions Heather West at IDC. "There is still a long path ahead." Her perspective is insightful.

Around 2025-2026, we may begin to see quantum computers that are genuinely useful for a few specialized tasks. IBM's goals include systems with over 4,000 qubits and circuits with up to 7,500 gates. These machines will not replace personal devices, but they could begin to tackle currently intractable problems in fields such as materials science, drug discovery, and finance. For example, quantum computers could aid in designing novel catalysts for carbon capture, accelerating the development of sustainable technologies.
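
Why do gate counts like 7,500 (or IBM's billion-gate goal for 2033) hinge on error rates? A crude model makes the point: if every gate independently fails with probability p, a circuit of G gates runs cleanly with probability (1 - p)^G. The per-gate error of 10^-3 used below is an assumed round number, and real noise is often correlated, so treat the output as an order-of-magnitude sketch.

```python
import math


def success_probability(p: float, gates: int) -> float:
    """Chance that none of `gates` operations fails (independent errors)."""
    return (1 - p) ** gates


def max_error_rate(gates: int, target: float = 0.5) -> float:
    """Largest per-gate error that still gives a `target` chance of success.

    Solves (1 - p) ** gates == target; expm1 keeps precision for tiny p.
    """
    return -math.expm1(math.log(target) / gates)


print(f"{success_probability(1e-3, 7_500):.1e}")   # clean-run odds at p = 1e-3
print(f"{max_error_rate(7_500):.1e}")              # p needed for 7,500 gates
print(f"{max_error_rate(1_000_000_000):.1e}")      # p needed for a billion gates
```

At an assumed 0.1% error per gate, a 7,500-gate circuit would almost never run cleanly, and a billion-gate computation needs effective error rates below about one in a billion, the territory of error-corrected logical qubits.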

The Asia Pacific region is projected to see rapid growth in quantum computing, at roughly 38% annually through 2032. Countries like Singapore are implementing national quantum strategies, and India is investing in quantum communication networks.
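
A 38% annual growth rate compounds quickly. The projection's starting year isn't given here, so the sketch below tries two hypothetical base years; the function name is my own.

```python
def growth_multiplier(annual_rate: float, years: int) -> float:
    """How many times larger a market becomes after compounding for `years`."""
    return (1 + annual_rate) ** years


for base_year in (2024, 2025):  # hypothetical starting points
    years = 2032 - base_year
    print(f"from {base_year}: x{growth_multiplier(0.38, years):.1f} by 2032")
```

Either way, a market growing at that pace would end up roughly ten times its starting size or more.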

As error correction improves and qubit counts increase, we may cross critical thresholds that unlock entirely new possibilities. The telecommunications industry is already seeing early indications: NTT DOCOMO and D-Wave Quantum report a roughly 15% reduction in mobile network congestion using quantum optimization. This is just a glimpse of the transformative potential to come.
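
Quantum annealers such as D-Wave's are built to attack optimization problems written in a standard form called QUBO (quadratic unconstrained binary optimization): find the yes/no choices x that minimize an "energy" made of weighted single and pairwise terms. The toy problem below is hypothetical (three on/off switches where neighbours clash, loosely like adjacent cells interfering) and is solved by brute force on an ordinary computer, purely to show the problem shape; it is not how DOCOMO and D-Wave formulated their network problem.

```python
from itertools import product

# Hypothetical QUBO: diagonal terms reward switching a variable on,
# off-diagonal terms penalise switching on two neighbours together.
Q = {(0, 0): -1, (1, 1): -1, (2, 2): -1, (0, 1): 2, (1, 2): 2}


def energy(x):
    """E(x) = sum of Q[i, j] * x[i] * x[j]; for binary x, x[i]*x[i] == x[i]."""
    return sum(w * x[i] * x[j] for (i, j), w in Q.items())


# Exhaustive search over all 2**3 assignments (fine for a toy, hopeless at scale).
best = min(product((0, 1), repeat=3), key=energy)
print(best, energy(best))  # (1, 0, 1) -2
```

Brute force checks all 2^n combinations, which becomes hopeless beyond a few dozen variables; an annealer instead lets a physical system settle toward low-energy states, though how often that beats good classical heuristics is still debated.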

Conclusion: Embracing the Quantum Era

From Feynman's insightful lecture in 1981 to today's global race, quantum computing has transitioned from a physics curiosity to a seemingly inevitable technological force. While quantum computers are not yet addressing everyday problems, the rapid pace of progress suggests that real-world applications are approaching.

Significant challenges remain, particularly in error correction and scaling, but the potential rewards are equally immense. From revolutionizing medicine to potentially breaking today's encryption (and driving the shift to quantum-resistant schemes) to optimizing complex systems, quantum computing promises to reshape industries and unlock possibilities that are currently difficult to fully grasp.

The quantum future’s coming fast, built one quirky qubit at a time. And if that still sounds like science fiction, remember that smartphones once seemed just as fantastical. I can’t wait to see what crazy stuff it unlocks next. Can you?